Measuring ROI from a Data Annotation Investment

In the enterprise AI lifecycle, data annotation often appears as a line item—an operational expense that quietly powers model development. But for teams serious about production-grade performance, annotation is not just a cost center. It’s an investment. And like any investment, it must deliver measurable return.

The challenge is this: annotation doesn’t generate revenue directly. Its impact is indirect, influencing model behavior, system stability, compliance adherence, and user outcomes. For leaders overseeing AI deployments, the ability to quantify that impact is what separates tactical labeling from strategic data operations.

In this post, we break down how to evaluate return on investment (ROI) from annotation projects by linking labeled data quality to model metrics, operational KPIs, and downstream business value.

Why Measuring Annotation ROI Has Been Historically Elusive

Annotation’s influence is clear: models trained on better labels perform better. But attribution is messy. Teams struggle to isolate the effect of labeling quality from model tuning, feature engineering, or infrastructure upgrades. That’s why annotation ROI is often underreported, even though annotation is one of the highest-leverage investments in ML operations.

This lack of visibility creates budgeting problems. Executives underinvest in labeling infrastructure. Data scientists inherit noisy datasets. And when models fail, the cause is traced to the algorithm, not the ground truth it was trained on.

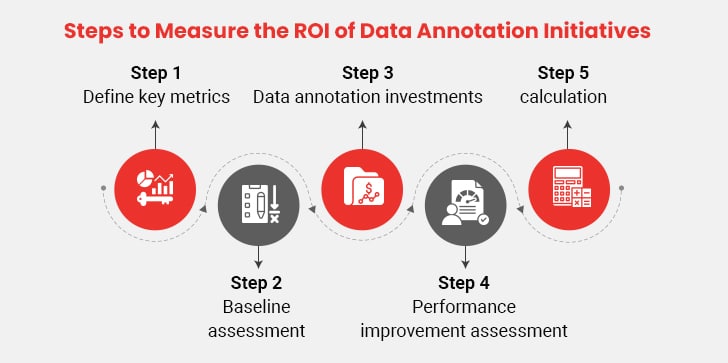

To fix this, organizations need a structured methodology to evaluate annotation ROI—combining model-centric metrics with operational and business KPIs.

Core Framework: Linking Labels to Model Performance

The first ROI lens is model performance. The question here is simple: did the annotation investment improve how the model performs in validation, test, or production environments?

This is measured through:

- Precision, recall, F1-score: Compare model scores before and after a labeling effort, evaluated on the same held-out test set so the change can be attributed to the labels. Even small increases in recall can deliver disproportionate value in high-volume systems like fraud detection or medical triage.

- Error rate reduction: Track how many edge-case failures, false positives, or false negatives were resolved after high-quality annotation cycles or re-labeling efforts.

- Confidence score calibration: Well-annotated data helps models not only predict more accurately but also express uncertainty more reliably. This makes confidence scores more trustworthy as inputs to downstream decision-making.

- Data efficiency: Did the new annotated data enable equivalent model performance with fewer samples? High-quality labels often reduce the amount of training data needed, which directly lowers compute and training costs.

When annotation is done right, these metrics move. And when they don’t, that’s a signal that either labeling quality was poor or task-scoping was misaligned.
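The before-and-after comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the `ConfusionCounts` class, the `metric_lift` helper, and the counts are all hypothetical. It assumes both runs are scored on the same held-out test set with the model configuration held fixed, so that the difference reflects the labeling change.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Binary confusion-matrix counts from one evaluation run (hypothetical helper)."""
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives

    def precision(self) -> float:
        denom = self.tp + self.fp
        return self.tp / denom if denom else 0.0

    def recall(self) -> float:
        denom = self.tp + self.fn
        return self.tp / denom if denom else 0.0

    def f1(self) -> float:
        p, r = self.precision(), self.recall()
        return 2 * p * r / (p + r) if (p + r) else 0.0


def metric_lift(before: ConfusionCounts, after: ConfusionCounts) -> dict:
    """Absolute change in each metric between two evaluation runs."""
    return {
        "precision": after.precision() - before.precision(),
        "recall": after.recall() - before.recall(),
        "f1": after.f1() - before.f1(),
    }


# Made-up counts: same test set, model trained before vs. after a re-labeling effort.
before = ConfusionCounts(tp=80, fp=20, fn=20)
after = ConfusionCounts(tp=90, fp=10, fn=10)

lift = metric_lift(before, after)
for name, delta in lift.items():
    print(f"{name}: {delta:+.3f}")
```

If the lift stays near zero after a labeling campaign, that is the signal mentioned above: either label quality or task scoping needs a second look.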

Operational ROI: How Annotation Drives Efficiency

Beyond model metrics, annotation investment also delivers ROI by improving internal efficiency across teams:

- Reduced rework and revision cycles: Clean, well-structured data minimizes time spent debugging misclassified cases or retraining on flawed assumptions.

- Faster iteration cycles: When annotation guidelines, tools, and workflows are optimized, model teams get the data they need faster, which shortens development sprints.

- Improved onboarding and documentation: Strong annotation pipelines create reusable label taxonomies, edge-case libraries, and training sets that future teams can rely on. This accelerates knowledge transfer and reduces startup time on new projects.

- Compliance resilience: In regulated domains, annotation pipelines with built-in audit trails and version control reduce the cost and time required to satisfy data lineage, explainability, or privacy requirements.

The result: more time spent on model innovation, less time spent cleaning up labeling messes.

Business-Level ROI: When Better Labels Create Better Products

Ultimately, the most meaningful ROI from annotation shows up not in metrics, but in market outcomes:

- Customer experience: For AI systems that interact directly with users (recommendation engines, chatbots, personalization layers), better labeled training data translates to fewer errors, more relevant suggestions, and higher engagement.

- Operational cost savings: In automation-driven workflows (like document processing or call center triage), more accurate models reduce manual intervention, speeding up processing and lowering labor costs.

- Risk reduction: In AI systems that deal with compliance, credit, or safety decisions, better labels reduce the likelihood of biased outcomes, legal exposure, or regulatory fines.

- Revenue impact: In many sectors, more accurate predictions drive real business gains, whether in fraud prevention, ad targeting, or patient stratification. The improved accuracy attributable to annotation can be mapped directly to avoided losses or incremental revenue.

In these scenarios, the annotation investment isn’t just defensible—it becomes self-funding.
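To make "self-funding" concrete, here is a rough worked example of mapping a recall gain to avoided losses and then to ROI. The function names and every figure are hypothetical, and the sketch assumes the entire recall gain is attributable to the annotation effort; in practice only a share of it may be.

```python
def avoided_fraud_loss(fraud_cases: int, avg_loss: float,
                       recall_before: float, recall_after: float) -> float:
    """Value of the extra fraud cases caught because recall improved."""
    return fraud_cases * avg_loss * (recall_after - recall_before)


def annotation_roi(benefit: float, cost: float) -> float:
    """ROI as a fraction: (benefit - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


# Made-up inputs for one quarter of a fraud-detection system.
benefit = avoided_fraud_loss(
    fraud_cases=1_000,    # fraudulent transactions in the period
    avg_loss=500.0,       # average loss per missed fraud case
    recall_before=0.80,   # recall before the re-labeling effort
    recall_after=0.85,    # recall after the re-labeling effort
)
roi = annotation_roi(benefit, cost=10_000.0)  # annotation spend for the period

print(f"avoided loss: {benefit:,.0f}")
print(f"ROI: {roi:.0%}")
```

With these inputs, a five-point recall gain avoids roughly 25,000 in losses against 10,000 in annotation spend, an ROI of about 150%.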

Tracking ROI Over Time: What to Measure and Monitor

ROI is not static. As models evolve, data shifts, and tasks get more complex, annotation strategies must adapt—and their value must be tracked continuously.

Teams should implement:

- Annotation cost per usable sample: Not all labels are equal. Measure how much it costs to produce a label that meets your accuracy threshold and supports model lift.

- Model performance per annotation dollar: Track how much model improvement is gained relative to the cost of labeling campaigns or vendor engagements.

- Time to value: How long does it take for a batch of labeled data to result in a deployed model or feature release? Shorter cycles mean faster ROI realization.

- Waste ratios: What percentage of annotated data is rejected, reworked, or never used in production? Lower waste indicates tighter alignment between annotation and model needs.

When these metrics are built into dashboards and OKRs, annotation becomes a managed investment—not an invisible cost.
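The four tracking metrics above could be computed per labeling batch along these lines. This is a sketch under stated assumptions: the `AnnotationBatch` fields, the use of F1 as the performance measure, and the sample figures are all illustrative, and waste is simplified to "labels that never reached a production training set".

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AnnotationBatch:
    """One labeling campaign; all fields are illustrative assumptions."""
    total_cost: float      # total spend on the batch
    labels_produced: int   # every label delivered
    labels_accepted: int   # labels that met the QA accuracy threshold
    labels_used: int       # accepted labels that reached a production training set
    f1_before: float       # model F1 before training on the batch
    f1_after: float        # model F1 after training on the batch
    labeled_on: date       # date the batch was delivered
    deployed_on: date      # date the resulting model or feature shipped


def cost_per_usable_sample(b: AnnotationBatch) -> float:
    if b.labels_accepted == 0:
        raise ValueError("no accepted labels in batch")
    return b.total_cost / b.labels_accepted


def lift_per_thousand_dollars(b: AnnotationBatch) -> float:
    """F1 points gained per 1,000 currency units of annotation spend."""
    if b.total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (b.f1_after - b.f1_before) / b.total_cost * 1_000


def time_to_value_days(b: AnnotationBatch) -> int:
    return (b.deployed_on - b.labeled_on).days


def waste_ratio(b: AnnotationBatch) -> float:
    """Share of produced labels that never made it into production training."""
    if b.labels_produced == 0:
        raise ValueError("no labels produced in batch")
    return 1 - b.labels_used / b.labels_produced


batch = AnnotationBatch(
    total_cost=5_000.0, labels_produced=10_000, labels_accepted=8_000,
    labels_used=7_500, f1_before=0.80, f1_after=0.84,
    labeled_on=date(2024, 1, 10), deployed_on=date(2024, 2, 9),
)

print(f"cost per usable sample: {cost_per_usable_sample(batch):.3f}")
print(f"F1 lift per $1k: {lift_per_thousand_dollars(batch):.4f}")
print(f"time to value: {time_to_value_days(batch)} days")
print(f"waste ratio: {waste_ratio(batch):.0%}")
```

Computing these per batch rather than per project makes trends visible: a rising waste ratio or falling lift per dollar is an early sign that annotation and model needs are drifting apart.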

How FlexiBench Helps Clients Maximize and Measure Annotation ROI

At FlexiBench, we work with enterprise AI teams not just to label data, but to optimize its impact. Our infrastructure is designed to help clients measure ROI across the full annotation lifecycle—from task scoping and quality assurance to model performance benchmarking and cost-efficiency tracking.

We support per-project performance audits, where annotation outcomes are linked to model metrics and business KPIs. Our clients gain visibility into labeling precision, reviewer agreement, task throughput, and contribution to model lift.

We also provide tools for active learning, re-labeling prioritization, and QA feedback loops—all designed to reduce waste, focus annotation on the highest-impact data slices, and shorten the path from labeled data to deployed intelligence.
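As a generic illustration of what prioritizing annotation by impact can look like, the sketch below ranks unlabeled samples by prediction entropy, a common uncertainty-sampling heuristic in active learning. It is not a description of FlexiBench’s tooling; the function names, sample IDs, and probabilities are hypothetical.

```python
import math


def entropy(probs: list) -> float:
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def prioritize_for_labeling(predictions: dict, budget: int) -> list:
    """Return the IDs of the `budget` samples the model is least certain about."""
    ranked = sorted(predictions, key=lambda sid: entropy(predictions[sid]), reverse=True)
    return ranked[:budget]


# Made-up model outputs: sample ID -> predicted class probabilities.
predictions = {
    "doc-1": [0.98, 0.02],  # confident prediction, low labeling priority
    "doc-2": [0.55, 0.45],  # near coin-flip, high labeling priority
    "doc-3": [0.70, 0.30],
}

queue = prioritize_for_labeling(predictions, budget=2)
print(queue)
```

Spending the labeling budget on the samples a model is least sure about is one way to raise the lift gained per label, though entropy is only one of several possible ranking signals.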

With FlexiBench, annotation isn’t just a support function. It’s a strategic input that’s monitored, managed, and continuously improved—because ROI only happens when outcomes are measured and optimized.

Conclusion: The Value of Annotation Is Measurable—If You Know Where to Look

In AI development, labeled data isn’t just fuel—it’s leverage. But like any investment, its value depends on how it’s used, managed, and evaluated. When organizations treat annotation as a measurable asset—not a sunk cost—they unlock faster iteration, better models, and more predictable business outcomes.

At FlexiBench, we help AI leaders move from labeling at scale to labeling with strategy. Because when you can measure the impact of every dataset you create, you stop just training models—and start building systems that learn intelligently, perform reliably, and deliver results that matter.
